Load Balancing Azure OpenAI using Application Gateway

March 2024 Update: This post shares one of five ways to address Azure OpenAI’s Tokens per Minute (TPM) limitations. To learn all five approaches, check out my latest post.

In my previous post, I shared best practices on addressing Azure OpenAI token limits, which included a section on using multiple Azure OpenAI instances distributed across regions, accessed through a load balancer.

Azure API Management (APIM) is a popular option for this purpose. It not only assists with Azure OpenAI token limits, but also provides a comprehensive solution for managing and monetizing multi-cloud APIs. However, using APIM solely as an OpenAI load balancer may be costly. Hence, in this post, I will explain an alternative approach using Azure Application Gateway (AppGW).

If you think APIM is more suitable for your organization, see Load Balancing using Azure API Management.

For simplicity, I will provide steps to configure a public AppGW to multiple public Azure OpenAI endpoints. The process for making it fully private will be similar, with additional virtual network configurations needed.

Also, while this post is about load balancing Azure OpenAI, the same will apply to any of the other Azure Cognitive Services.

Why not Azure Traffic Manager or Azure Load Balancer?

Azure Traffic Manager does not support SSL offloading, which is required. Azure Load Balancer does not work with private endpoints, which is required for Azure Cognitive Services virtual network integration.

Alternatively, Azure Front Door is a viable solution. The configuration is similar to what is shared in this post.

Azure Application Gateway Configuration

Azure Application Gateway (AppGW) is a web traffic load balancer that enables you to manage traffic to your web applications. It can be deployed as a standard load balancer or a Web Application Firewall (read full documentation.) Since Azure Cognitive Services such as Azure OpenAI are API endpoints, AppGW can be used to load balance between multiple Azure OpenAI services.

    flowchart LR
      A(Client) -.- B
      subgraph AppGW[Application Gateway]
        B[Frontend IP] -.- C[HTTP/HTTPS<br />listener]
        C --> D((Rule))
        SPACE
        subgraph Rule
          D <--> E[/HTTP Setting/]
        end
      end
      subgraph Azure OpenAI
        Rule --- F[East US<br />api-key: 30235...]
        Rule --- G[France Central<br />api-key: 14jdu...]
        Rule --- H[Japan East<br />api-key: 53af6...]
        Rule --- I[etc]
      end
      style SPACE fill:transparent,stroke:transparent,color:transparent,height:1px;

The challenge is how to configure it (this took me a while.) Here are the settings that worked.

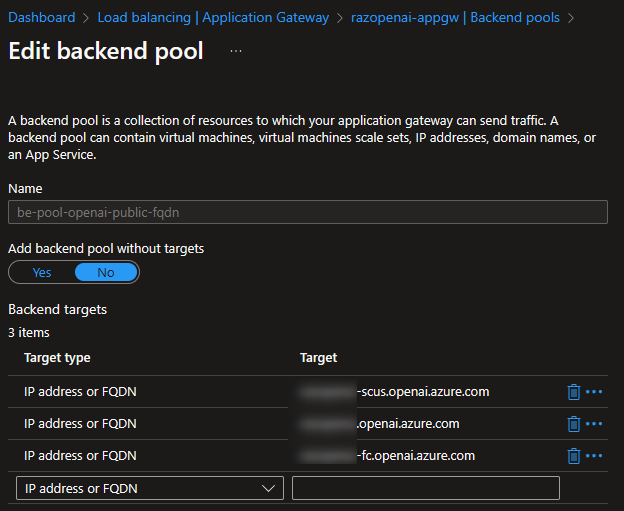

Backend Pool and Backend Settings

The backend pool is straightforward: specify the FQDN of every Azure OpenAI endpoint.

Azure App Gateway: Backend Pools

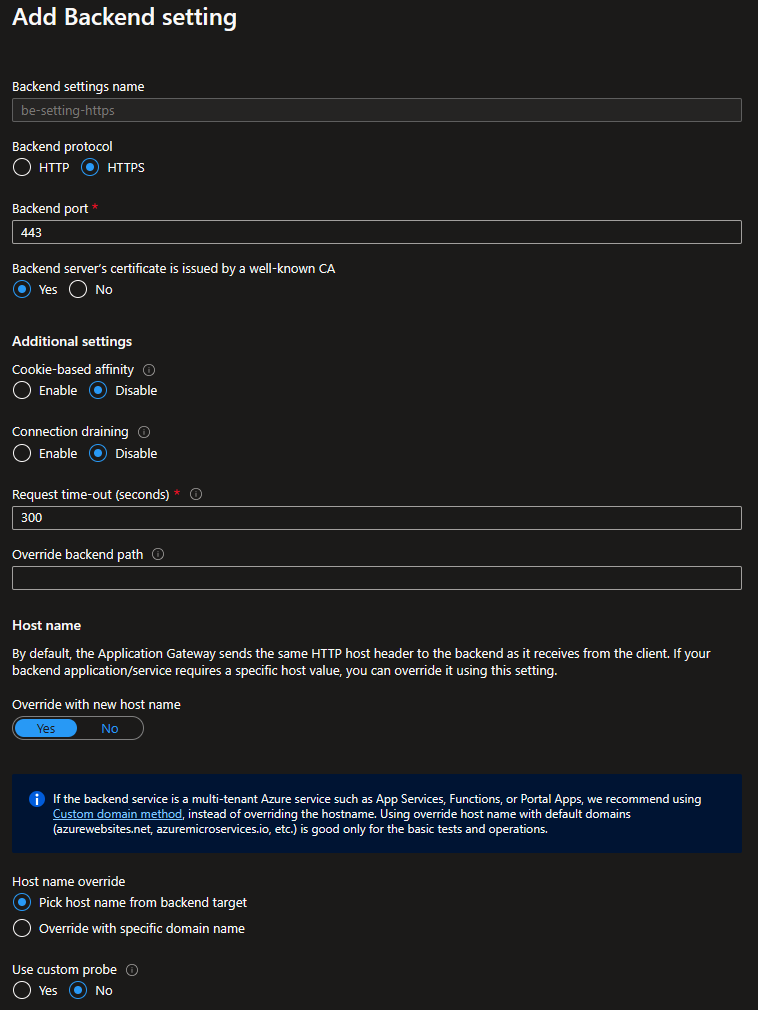
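Note that a backend pool target is a bare host name, not the full endpoint URL shown in the Azure portal. As a small convenience sketch, the helper below strips an endpoint URL down to its FQDN; the function name and the endpoints are placeholders for illustration.

```python
from urllib.parse import urlparse


def endpoint_to_fqdn(endpoint: str) -> str:
    """Extract the bare host name that a backend pool target expects."""
    host = urlparse(endpoint).hostname
    if host is None:
        raise ValueError(f"not a URL with a host name: {endpoint!r}")
    return host


# Placeholder endpoints; substitute your own resource names.
endpoints = [
    "https://my-aoai-eastus.openai.azure.com/",
    "https://my-aoai-japaneast.openai.azure.com/",
]
print([endpoint_to_fqdn(e) for e in endpoints])
```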

Since the backend pool members are public HTTPS endpoints, choose the HTTPS protocol and indicate that the backend certificate is issued by a well-known CA.

Azure App Gateway: Backend Setting

Other Settings

- Cookie-based affinity (Disabled): so that requests are distributed round-robin across all backends instead of sticking to one.

- Request time-out (300 seconds): how long AppGW waits for Azure OpenAI to respond before timing out. From experience, responses with more tokens take longer, so I set this to 300 seconds (for now).

- Override with new host name (Yes): Azure OpenAI seems to require that the request's host name remain *.openai.azure.com; this setting fixed it.

- Host name override (Pick host name from backend target): to pass the exact host name of each backend pool member.

- Use custom probe (No): for now, since we haven’t created the custom probe yet.

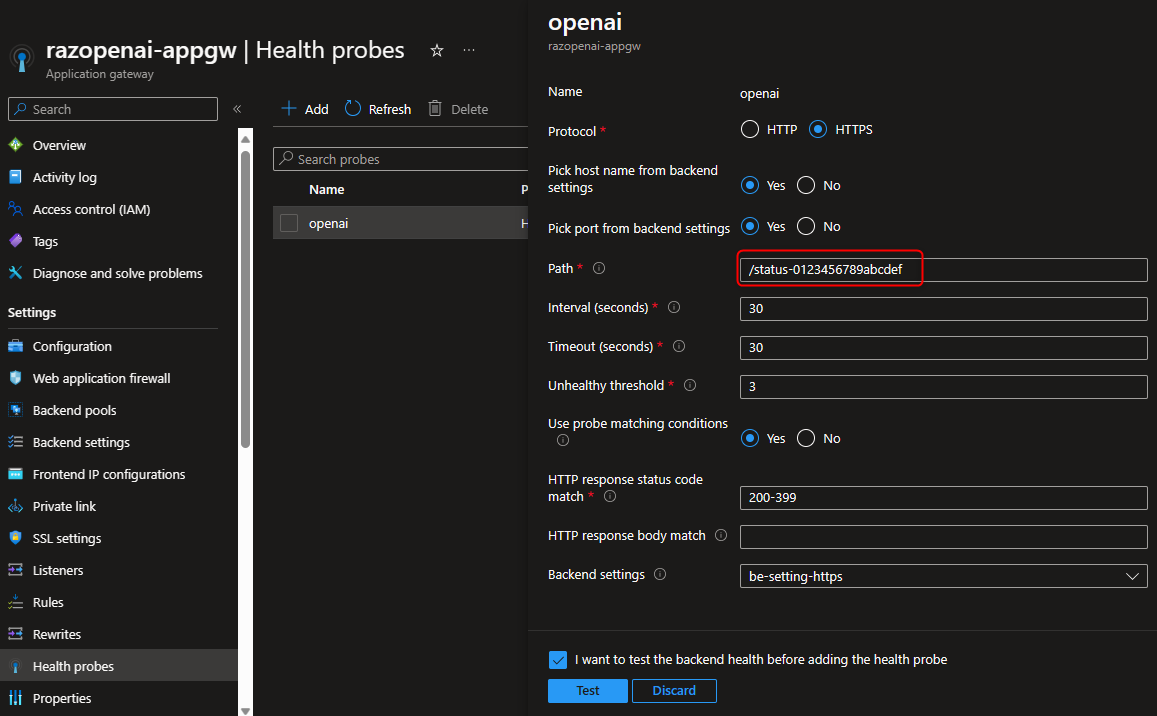

Health Probes

AppGW will monitor the health of all backend pool resources and will automatically remove any resource that is considered unhealthy. Because Azure OpenAI does not have a default page to its endpoints, AppGW will by default consider every instance unhealthy. Therefore, creating a custom health probe is required.

Azure App Gateway: Custom Health Probe

The custom health probe path is /status-0123456789abcdef (a magic path which I found somewhere while searching around.) Test the probe and check that every backend in the pool shows as healthy.
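To sanity-check the probe path outside the portal, a script along these lines can request it from each backend directly. This is a minimal sketch: the host names are placeholders, the function names are illustrative, and the 200-399 range reflects Application Gateway's default definition of a healthy response, so adjust it if your probe uses custom match conditions.

```python
import urllib.error
import urllib.request

PROBE_PATH = "/status-0123456789abcdef"

# Placeholder host names; replace with the FQDNs in your backend pool.
BACKEND_FQDNS = [
    "my-aoai-eastus.openai.azure.com",
    "my-aoai-francecentral.openai.azure.com",
]


def probe_url(fqdn: str, path: str = PROBE_PATH) -> str:
    """Build the HTTPS URL that the health probe would request."""
    return f"https://{fqdn}{path}"


def is_healthy(status_code: int) -> bool:
    """Mirror AppGW's default match condition: 200-399 counts as healthy."""
    return 200 <= status_code <= 399


def probe(fqdn: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code the backend gives for the probe path."""
    try:
        with urllib.request.urlopen(probe_url(fqdn), timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # 4xx/5xx responses still carry a status code


def report(fqdns) -> None:
    """Print one line per backend with its status code and verdict."""
    for fqdn in fqdns:
        try:
            code = probe(fqdn)
            verdict = "healthy" if is_healthy(code) else "unhealthy"
            print(f"{fqdn}: {code} ({verdict})")
        except OSError as err:  # DNS failure, timeout, TLS error, ...
            print(f"{fqdn}: unreachable ({err})")
```

Calling `report(BACKEND_FQDNS)` prints one line per backend. A backend that fails here would most likely also be marked unhealthy by AppGW.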

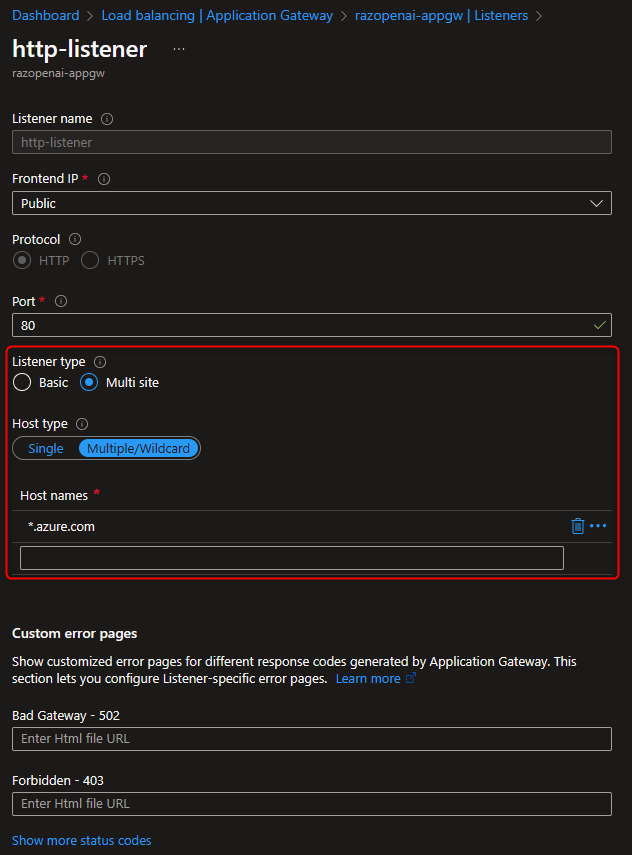

Listeners

To simplify this proof-of-concept, I used an HTTP public listener.

Rules

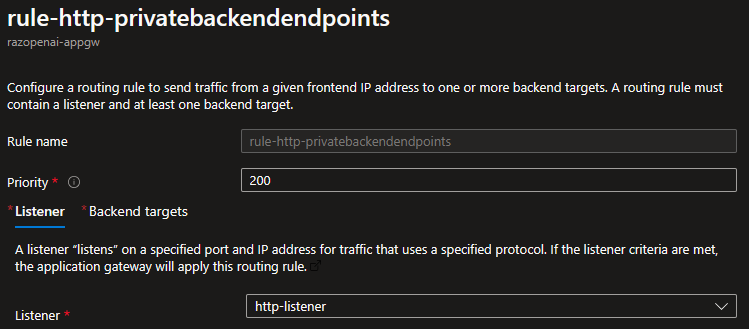

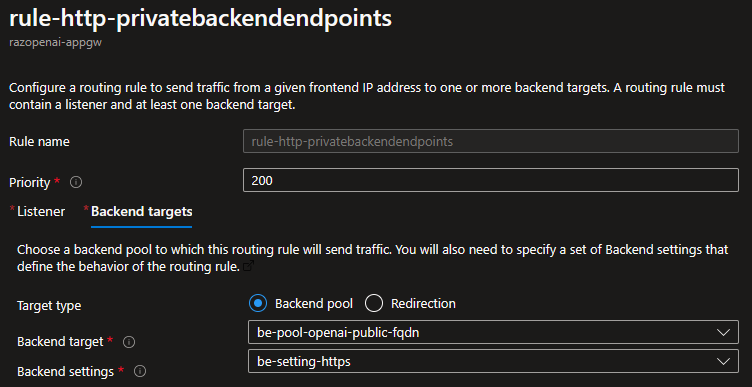

Rules are where we stitch all these settings together.

Azure App Gateway Rules: Listener

Azure App Gateway Rules: Backend Targets

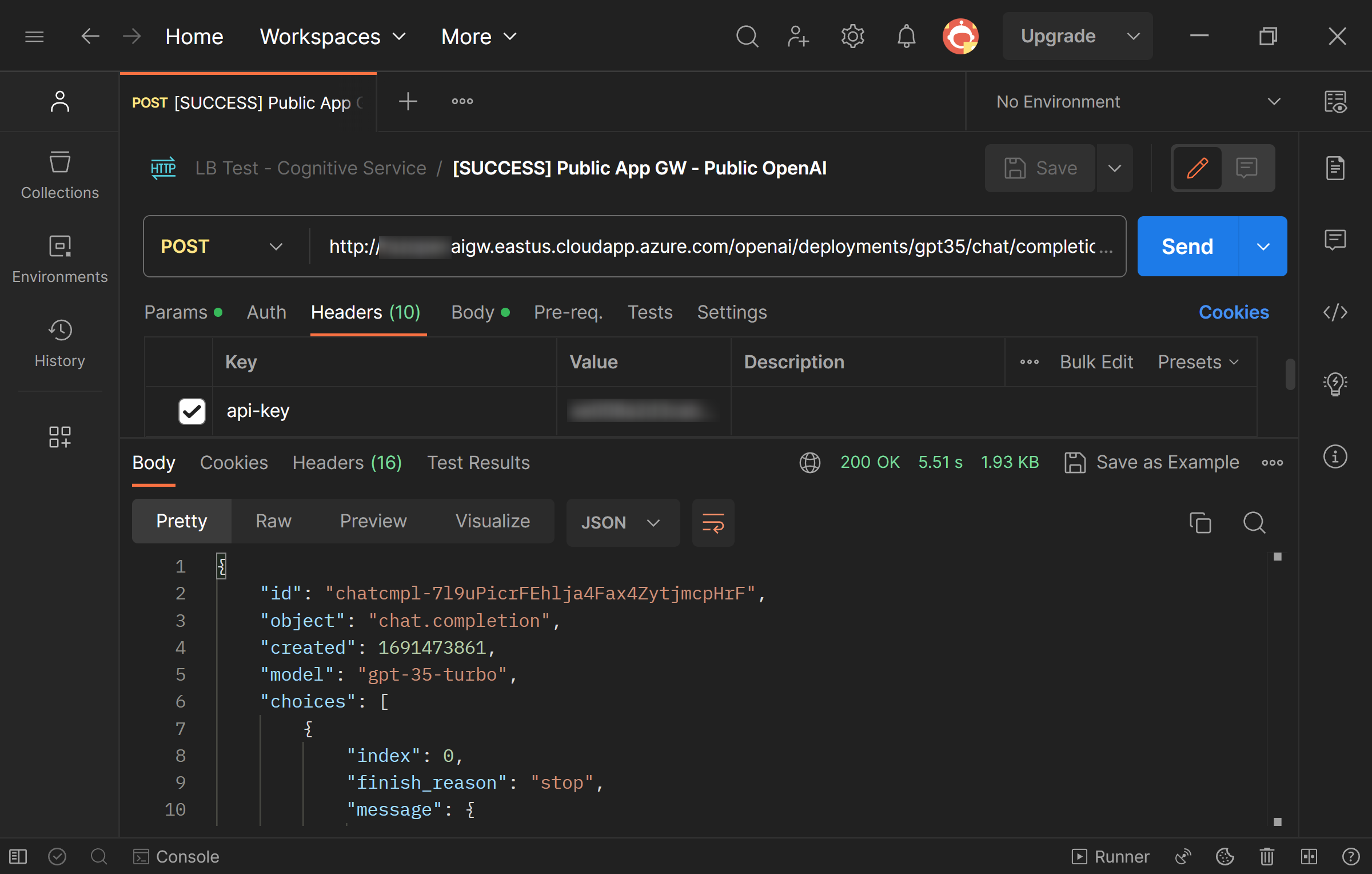

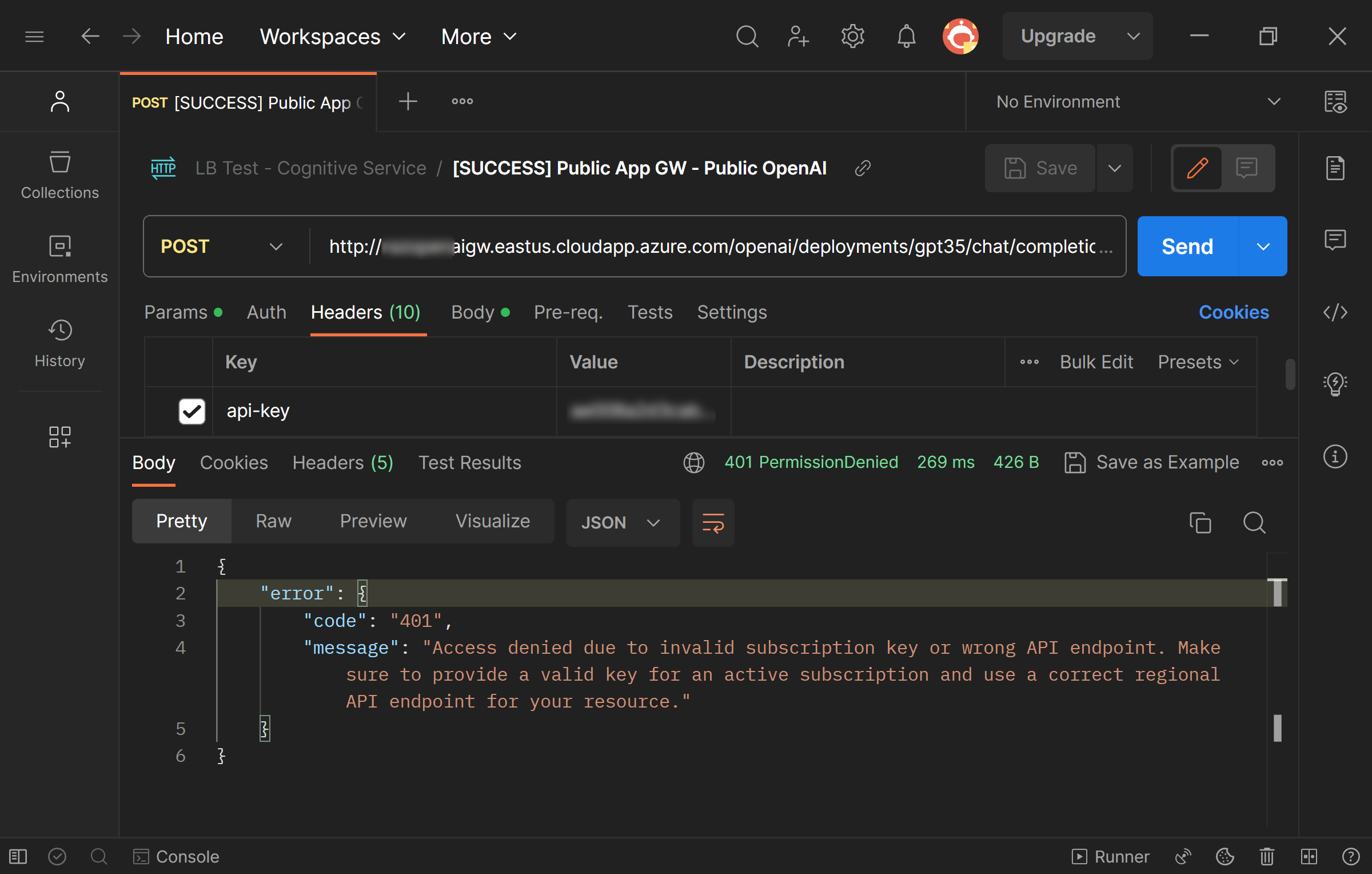

Testing using Postman

The simplest way to test is with Postman. Call the API several times and expect only some of the calls to succeed. For example, I have set up my AppGW with three Azure OpenAI endpoints in its backend pool, so when I use the key of one specific endpoint (e.g. East US), I expect one successful POST followed by two access-denied responses.

Testing: Successful Call (1 out of every 3 calls)

Testing: Access Denied (2 out of every 3 calls)

This result shows that load balancing is working. As each Azure OpenAI endpoint requires a different api-key, we expect the manual calls to work only when the load balancer hits that specific endpoint.
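The 1-in-3 pattern can be reasoned about with a tiny simulation. This is an illustrative model only, assuming strict round-robin and made-up backend names and keys; it sends no traffic, and the real distribution from AppGW may not be this regular.

```python
from itertools import cycle

# Hypothetical backends, each accepting only its own api-key.
BACKEND_KEYS = {
    "east-us": "key-east-us",
    "france-central": "key-france-central",
    "japan-east": "key-japan-east",
}


def simulate_calls(client_key: str, calls: int) -> list:
    """Return the status code of each call under strict round-robin."""
    backends = cycle(BACKEND_KEYS.items())
    statuses = []
    for _ in range(calls):
        _name, accepted_key = next(backends)
        # A backend returns 200 for its own key and 401 for any other key.
        statuses.append(200 if client_key == accepted_key else 401)
    return statuses


results = simulate_calls("key-east-us", calls=6)
print(results)  # [200, 401, 401, 200, 401, 401]
```

With three backends and a single key, exactly one call in every three lands on the backend that accepts the key, which matches what Postman shows.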

For application integration, we need a way to authenticate without using the api-key.

Keyless Authentication for Code using Microsoft Entra ID (formerly Azure AD)

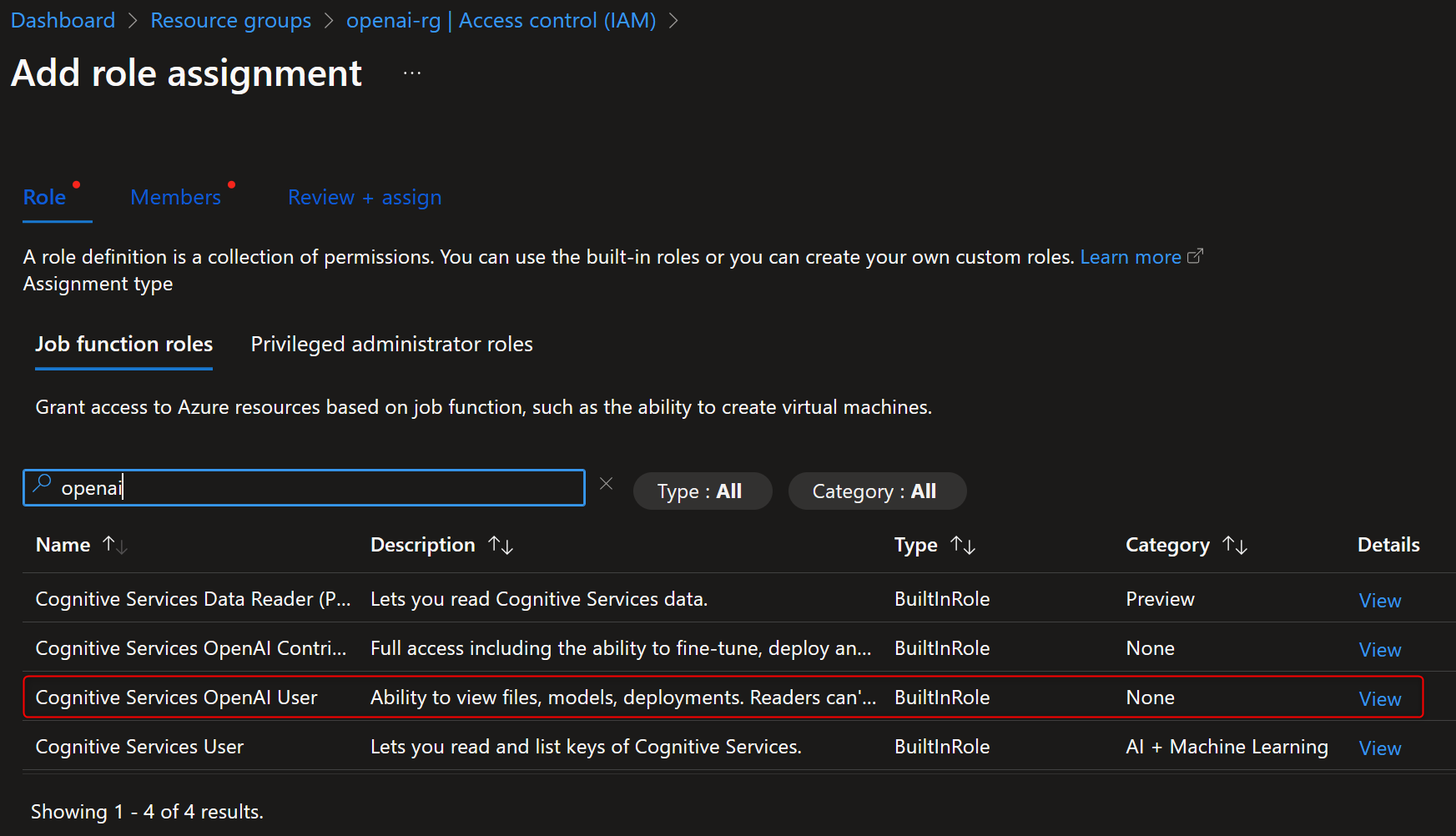

The good news is that the OpenAI SDK, LangChain, and Semantic Kernel all support Azure AD integration. The first step is to give the application (via Managed Identities or Service Principal) and/or the developer/engineer the Cognitive Services OpenAI User role (see how-to.)

Azure OpenAI Role-based Access (RBAC)

After doing so, you can try any one of these code snippets (or in a different language that these SDKs support.)

Alternative Method: AppGW Rewrite Rules

Azure Application Gateway also supports Rewrite Rules, which can inject the api-key header depending on the backend. This solution is possible but requires security validation.

Azure AD (Keyless) Authentication using OpenAI SDK

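    import os

    import openai
    from azure.identity import DefaultAzureCredential

    # Written for the pre-1.0 openai Python package (module-level configuration).
    # The base URL and API version are assumed to come from environment variables.
    OPENAI_API_BASE = os.environ["OPENAI_API_BASE"]
    OPENAI_API_VERSION = os.environ["OPENAI_API_VERSION"]

    # Exchange the ambient Azure identity for a Cognitive Services access token.
    azure_credential = DefaultAzureCredential()
    ad_token = azure_credential.get_token("https://cognitiveservices.azure.com/.default")

    openai.api_type = "azure_ad"
    openai.api_key = ad_token.token  # the Azure AD token takes the place of the api-key
    openai.api_base = OPENAI_API_BASE
    openai.api_version = OPENAI_API_VERSION

    # "engine" is the name of the Azure OpenAI deployment.
    completion = openai.Completion.create(engine="davinci", prompt="Tell me a joke.", max_tokens=20)
    print(completion.choices[0].text)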
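One caveat with fetching the token once: Azure AD access tokens expire, so a long-running application needs to refresh them. The helper below is a minimal sketch of that idea and is not part of any SDK; `CachedToken`, the `Token` stand-in, and the five-minute margin are illustrative choices. It relies only on the token object exposing `token` and `expires_on` (epoch seconds), as the object returned by `get_token` does.

```python
import time
from typing import Callable, NamedTuple, Optional


class Token(NamedTuple):
    """Stand-in with the same fields as azure.core.credentials.AccessToken."""
    token: str
    expires_on: int  # expiry as seconds since the epoch


class CachedToken:
    """Hypothetical helper: reuse a token until it is close to expiring."""

    def __init__(self, fetch: Callable[[], Token], margin_seconds: int = 300):
        self._fetch = fetch
        self._margin = margin_seconds
        self._current: Optional[Token] = None

    def get(self) -> str:
        now = time.time()
        if self._current is None or self._current.expires_on - now < self._margin:
            self._current = self._fetch()  # first use, or about to expire
        return self._current.token
```

In the snippet above, `fetch` could be `lambda: azure_credential.get_token("https://cognitiveservices.azure.com/.default")`, with `openai.api_key` set from `get()` before each request.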

Azure AD (Keyless) Authentication using LangChain

    import os

    from azure.identity import DefaultAzureCredential
    from langchain.chains import ConversationChain
    from langchain.chat_models import AzureChatOpenAI

    # These settings are assumed to come from environment variables.
    OPENAI_API_BASE = os.environ["OPENAI_API_BASE"]
    OPENAI_API_VERSION = os.environ["OPENAI_API_VERSION"]
    CHAT_DEPLOYMENT = os.environ["CHAT_DEPLOYMENT"]

    # Exchange the ambient Azure identity for a Cognitive Services access token.
    azure_credential = DefaultAzureCredential()
    ad_token = azure_credential.get_token("https://cognitiveservices.azure.com/.default")

    chat = AzureChatOpenAI(
        openai_api_type="azure_ad",
        openai_api_key=ad_token.token,
        openai_api_base=OPENAI_API_BASE,
        openai_api_version=OPENAI_API_VERSION,
        deployment_name=CHAT_DEPLOYMENT,
        max_tokens=100,
        temperature=0.3,
    )

    # ConversationChain keeps the chat history, so each question sees the earlier ones.
    conversation = ConversationChain(llm=chat)
    response1 = conversation.predict(input="What is the difference between OpenAI and Azure OpenAI?")
    response2 = conversation.predict(input="What are other LLMs?")
    response3 = conversation.predict(input="Which LLM is the best?")
    print(response1 + "\n" + response2 + "\n" + response3)

Azure AD (Keyless) Authentication using Semantic Kernel

    using Azure.Identity;
    using Microsoft.SemanticKernel;

    // Authenticate with the managed identity assigned to the host (no api-key).
    var azureIdentity = new ManagedIdentityCredential();

    // memoryStore is assumed to be a memory store instance created elsewhere.
    var kernel = Kernel.Builder
        .WithAzureTextCompletionService(
            deploymentName: "text-davinci-003",
            endpoint: "https://***.openai.azure.com/",
            credentials: azureIdentity)
        .WithAzureTextEmbeddingGenerationService(
            deploymentName: "text-embedding-ada-002",
            endpoint: "https://***.openai.azure.com/",
            credential: azureIdentity)
        .WithMemoryStorage(memoryStore)
        .Build();

Conclusion

Hope this helps!