Learning Notes | Development with Semantic Kernel (Sept 2023 Update)

The popularity of OpenAI’s ChatGPT has set off a rapid pace of innovation. One result is the rapid advancement of SDKs such as LangChain and Semantic Kernel.

To integrate OpenAI with enterprise applications, I learned and experimented with two SDKs: Semantic Kernel and LangChain. This post started as my personal learning notes from that journey, which began a few months ago when OpenAI’s ChatGPT sparked a wave of innovations.

Fast forward to today, Semantic Kernel has improved significantly since I wrote my previous notes. This post reflects the latest updates and replaces my outdated notes.

Disclaimer: The views expressed in this blog are solely mine and do not necessarily reflect the views of my employer. These are my personal notes and my understanding as I learn SK. For official information, please refer to the official documentation on Semantic Kernel.

If anything is wrong with this article, please let me know through the comments section below. I appreciate your help in correcting my understanding.

What is Semantic Kernel (SK)?

Semantic Kernel (SK) is an open-source SDK for using OpenAI, Azure OpenAI, and/or Hugging Face. SK is roughly 6+ months old (first commit was late Feb 2023), and is 4 months younger than LangChain. It currently supports C# and Python, with TypeScript and Java on the way (see supported languages.)

Built for Application Developers and ML Engineers

Although similar to LangChain, SK is created with developers in mind. SK makes it easy to build an enterprise AI orchestrator, which is at the center of the Copilot stack.

SK also supports features that ML Engineers and Data Scientists love, namely the ability to:

- chain functions together, and

- use Jupyter notebooks for experimentation. (Note: You can use notebooks for Python and C#. For C#, use Polyglot Notebooks.)

My personal experience: I found LangChain hard to learn as an application developer. Semantic Kernel’s clear documentation and code samples made it easier for me to understand. After using SK, I could also follow LangChain’s docs better. In addition, most LangChain resources online use Jupyter notebooks and do not show how to use it in an app. SK has more examples of application code.

SK Planner: Automated Function Calling

A useful technique for using OpenAI is to generate a plan with clear steps to solve a problem. For instance:

OpenAI Prompt: Create a Step-by-Step Plan

OpenAI Output: Step-by-Step Plan

Now imagine doing this in code. Given a problem input, the SK Planner can create a step-by-step plan based on the functions you specify and then execute them. See here for more info.

The SK planner is very similar to LangChain Agents. The main difference is that the SK planner creates the whole plan at the beginning, while LangChain Agents decide the next course of action at every step. LangChain’s approach sounds more adaptive, but it comes at the cost of slower performance and higher token usage.
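The difference between the two approaches can be sketched in plain Python. This is only an illustration under my own assumptions: it uses neither SK nor LangChain, the function names are hypothetical, and `fake_llm_plan` and `fake_llm_next_step` are stand-ins for real model calls. It only shows why deciding at every step tends to need more model calls.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical functions an orchestrator could call (illustrative names only).
FUNCTIONS: Dict[str, Callable[[str], str]] = {
    "retrieve_availability": lambda text: text + " -> availability found",
    "convert_time_zone": lambda text: text + " -> time zone converted",
    "send_invite": lambda text: text + " -> invite sent",
}


def fake_llm_plan(goal: str) -> List[str]:
    """Stand-in for ONE model call that returns the whole plan up front."""
    return ["retrieve_availability", "convert_time_zone", "send_invite"]


def fake_llm_next_step(goal: str, done: List[str]) -> str:
    """Stand-in for a model call that picks only the next action."""
    remaining = [name for name in FUNCTIONS if name not in done]
    return remaining[0] if remaining else "finish"


def run_planner_style(goal: str) -> Tuple[str, int]:
    """Plan once, then execute every step without asking the model again."""
    plan = fake_llm_plan(goal)
    result = goal
    for step in plan:
        result = FUNCTIONS[step](result)
    return result, 1  # exactly one model call was made


def run_agent_style(goal: str) -> Tuple[str, int]:
    """Ask the model for the next action before every step."""
    done: List[str] = []
    result = goal
    llm_calls = 0
    while True:
        step = fake_llm_next_step(goal, done)
        llm_calls += 1
        if step == "finish":
            break
        result = FUNCTIONS[step](result)
        done.append(step)
    return result, llm_calls


planner_result, planner_calls = run_planner_style("Book a meeting")
agent_result, agent_calls = run_agent_style("Book a meeting")
print(planner_calls, agent_calls)
```

In this toy run both styles produce the same result, but the agent style asks the stand-in model once per step plus once more to learn that it is finished. In exchange, it could react to the output of each step.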

AI Plugins: Semantic and Native Functions

“Plugin” is simply the term used by SK to represent a group of functions. Take a plugins directory like the one below:

    .
    ├── skills # or /plugins
    │   ├── CalendarSkill
    │   │   ├── RetrieveAvailability # retrieve available times for a meeting
    │   │   ├── ConvertTimeZone # convert time zones
    │   │   └── SendInvite # send meeting invite
    │   ├── RoomBookingSkill
    │   │   └── [...]
    │   ├── MsTeamsSkill
    │   │   └── [...]
    │   └── [...]

The plugins CalendarSkill, RoomBookingSkill, and MsTeamsSkill are imported into the SK Planner. The planner then inspects the functions available in each plugin and uses them to create a plan.

It is easier to think of “Plugins” as “Skills”. I am guessing that SK used the term “plugin” to align with OpenAI’s terminology.

SK plugins comply with OpenAI’s specifications to make it easy to create OpenAI ChatGPT Plugins in the future (as soon as you make it to the waitlist.)

Most “plugins” will involve integration with an external service such as LLMs, databases, MS Teams, SAP, etc. But it is definitely possible to create plugins that are composed purely of functions without any external service integration (e.g. MathSkill).

There are two types of functions that you can write with SK Plugins: semantic and native functions.
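To make the idea of "a plugin is a group of functions" concrete, here is a minimal sketch in plain Python. It is not SK's API: the `Plugin` and `PluginFunction` classes and the `describe_plugins` helper are hypothetical names I made up, and the handlers are stubs. It shows how a planner could be given a list of function names and descriptions to choose from.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class PluginFunction:
    """One callable plus the description a planner would read."""
    description: str
    handler: Callable[[str], str]


@dataclass
class Plugin:
    """A named group of functions (hypothetical structure, not SK's)."""
    name: str
    functions: Dict[str, PluginFunction] = field(default_factory=dict)

    def register(self, name: str, description: str,
                 handler: Callable[[str], str]) -> None:
        self.functions[name] = PluginFunction(description, handler)


def describe_plugins(plugins: List[Plugin]) -> str:
    """Build the text listing of functions that a planner prompt could include."""
    lines = []
    for plugin in plugins:
        for fn_name, fn in plugin.functions.items():
            lines.append(f"{plugin.name}.{fn_name}: {fn.description}")
    return "\n".join(lines)


calendar = Plugin("CalendarSkill")
# The handlers below are stubs; real ones would call a calendar service.
calendar.register("RetrieveAvailability",
                  "retrieve available times for a meeting",
                  lambda request: "stub: availability for " + request)
calendar.register("SendInvite",
                  "send meeting invite",
                  lambda request: "stub: invite sent for " + request)

catalog = describe_plugins([calendar])
print(catalog)
```

The descriptions matter as much as the code: they are what a planner would read when deciding which function fits a step.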

Semantic Functions

Semantic Functions are functions that are written using LLM prompts. Here are some examples:

IsValidEmail

Respond with 1 or 0, is this a valid e-mail address format:"{email}"

or

LanguageTranslator

Translate this text from {from} to {to}:"{input}"

What I absolutely love about SK is that these prompts are written in a .txt file. NO multi-line MAGIC STRINGS in the .py or .cs code! Here are useful references for creating semantic functions:

- Main documentation page

- Advice on prompt engineering

- Prompt template syntax, which includes guidance on function parameters and special characters.
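The benefit of keeping prompts in their own .txt files can be sketched without SK at all. The snippet below is an illustration under my own assumptions: `load_prompt` and `render` are hypothetical helpers, the file name is made up, and the `{name}` placeholders follow the simplified notation of the examples above rather than SK's actual template syntax (see the linked prompt template documentation for that).

```python
import tempfile
from pathlib import Path
from typing import Dict


def load_prompt(path: Path) -> str:
    """Read a prompt template from its own text file."""
    return path.read_text(encoding="utf-8")


def render(template: str, variables: Dict[str, str]) -> str:
    """Fill {name} placeholders; a dict is used because 'from' is a Python keyword."""
    return template.format_map(variables)


with tempfile.TemporaryDirectory() as tmp:
    # In a real project this file would live in the plugins directory.
    prompt_file = Path(tmp) / "LanguageTranslator.txt"
    prompt_file.write_text(
        'Translate this text from {from} to {to}:"{input}"',
        encoding="utf-8",
    )
    prompt = render(
        load_prompt(prompt_file),
        {"from": "English", "to": "French", "input": "Good morning"},
    )

print(prompt)
```

Because the template lives outside the code, it can be reviewed, versioned, and tested on its own, which is the point the "no magic strings" remark is making.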

Native Functions

Native Functions are the traditional code functions that we are used to (see here for the details.) Below is an example of a native function getting the square root of a numerical string.

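    // Requires: using System; using System.ComponentModel; using System.Globalization;
    // (plus the Semantic Kernel namespace that defines [SKFunction]).
    [SKFunction, Description("Take the square root of a number")]
    public string Sqrt(string number)
    {
        // Parse and format with the invariant culture so "2.5" is read the same way on every machine.
        return Math.Sqrt(Convert.ToDouble(number, CultureInfo.InvariantCulture)).ToString(CultureInfo.InvariantCulture);
    }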

Why create a Math skill/plugin?

LLMs are generally bad at math. To mitigate this, it is better to use proven math libraries and use the LLM only for what it is good at: natural language.
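For readers following along in Python, here is a rough counterpart of the C# Sqrt function above. It is a plain Python sketch, not SK code: it leaves out any SK decorator or registration step, and only shows the same string-in, string-out shape backed by the standard math library.

```python
import math


def sqrt(number: str) -> str:
    """Take the square root of a number passed in as a string."""
    # float() always expects "." as the decimal separator, regardless of locale,
    # which plays the role of the invariant culture in the C# version.
    return str(math.sqrt(float(number)))


print(sqrt("16"))  # prints 4.0
```

Note one small difference: Python prints the result as 4.0, while the C# version would format it as 4.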

Out-of-the-box (OOTB) Plugins

SK currently has a smaller set of out-of-the-box plugins compared to LangChain (remember, LangChain’s been around 4 months longer). Here are some noticeable differences at this time:

- LangChain’s tools are mostly about integrating with other systems. You can define custom tools like SK native functions but not many are available out-of-the-box.

- As SK is from Microsoft, it has an OOTB plugin for Microsoft Graph.

- LangChain has more integrations with non-Microsoft services (see list).

Semantic Memory

Semantic Memory is “an open-source service and plugin specialized in efficient indexing of datasets” (source here).

Most actual use-cases of AI-infused applications involve processing data so that it’s ready for the LLM to use. Chunking, embedding, vector store and vector search are some of the common topics of discussion in this area. See the documentation and the repo for more information.
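The chunk, embed, store, and search pipeline can be illustrated with a toy example. This is a sketch under heavy assumptions and not how Semantic Memory is implemented: the "embedding" is just a bag-of-words count and the "vector store" is a Python list. A real system would call an embedding model and a vector database. All function names here are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk(text: str, max_words: int = 6) -> List[str]:
    """Split text into fixed-size chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words])
            for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query: str, store: List[Tuple[str, Counter]], top_k: int = 1) -> List[str]:
    """Return the top_k chunks most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(store, key=lambda item: cosine(query_vector, item[1]),
                    reverse=True)
    return [text for text, _ in ranked[:top_k]]


document = (
    "Semantic Kernel supports C# and Python. "
    "Plugins group semantic and native functions. "
    "The planner creates a step-by-step plan from available functions."
)

# The "vector store": each chunk kept next to its vector.
store = [(piece, embed(piece)) for piece in chunk(document)]
best = search("Does the kernel support Python?", store)
print(best)
```

The retrieved chunk is what would be placed into the LLM prompt as context, so that the model answers from your data rather than from memory.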

No Support for Chat History Persistent Storage

I would love to be corrected here (please let me know in the comment section), but it is my understanding that SK has no built-in function for storing chat history in persistent storage such as a filesystem, Redis Cache, MongoDB, or other databases. Storing the history has to be handled separately by the developer in code.

This is an area that is handled very easily with LangChain (see my post about conversation history token management.)
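To give an idea of what handling this yourself might look like, here is a minimal sketch that persists chat messages to a JSON file. It is my own illustration, not SK or LangChain code: the `FileChatHistory` class is a hypothetical name, and a production version would more likely target Redis or a database and deal with concurrent writes.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict, List


class FileChatHistory:
    """Hypothetical helper that keeps chat messages in a JSON file."""

    def __init__(self, path: Path) -> None:
        self.path = path

    def load(self) -> List[Dict[str, str]]:
        """Return all stored messages, or an empty list if nothing is saved yet."""
        if not self.path.exists():
            return []
        return json.loads(self.path.read_text(encoding="utf-8"))

    def append(self, role: str, content: str) -> None:
        """Add one message and rewrite the whole file."""
        messages = self.load()
        messages.append({"role": role, "content": content})
        self.path.write_text(json.dumps(messages, indent=2), encoding="utf-8")


with tempfile.TemporaryDirectory() as tmp:
    history_file = Path(tmp) / "chat_history.json"

    history = FileChatHistory(history_file)
    history.append("user", "What is Semantic Kernel?")
    history.append("assistant", "An open-source SDK for working with LLMs.")

    # A new object (for example after an app restart) sees the same messages.
    restored = FileChatHistory(history_file).load()

print(len(restored))
```

The restored list can then be passed back into the prompt on the next turn, trimmed as needed to stay within the token limit.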

Roadmap

The good news is that this space is rapidly advancing, and any limitations experienced today may no longer hold true in a few months. There were 35 merged pull requests in the last week alone.

SK Repo Insights from Aug 25 to Sep 1 2023

In addition to the great public community contributing to these open-source projects, I believe there is a full-time team that is continuously enhancing this SDK. We can see the public roadmap of SK in the repo’s GitHub projects.

Practical Development with Semantic Kernel

As mentioned repeatedly, SK is built with developers in mind. This section covers some practical components that demonstrate this.

VS Code Extension

SK semantic functions are best written in VS Code with the official Semantic Kernel Tools Extension.

VSCode Extension: Create Semantic Functions

VSCode Extension: Test Semantic Functions against Multiple Models (also see here)

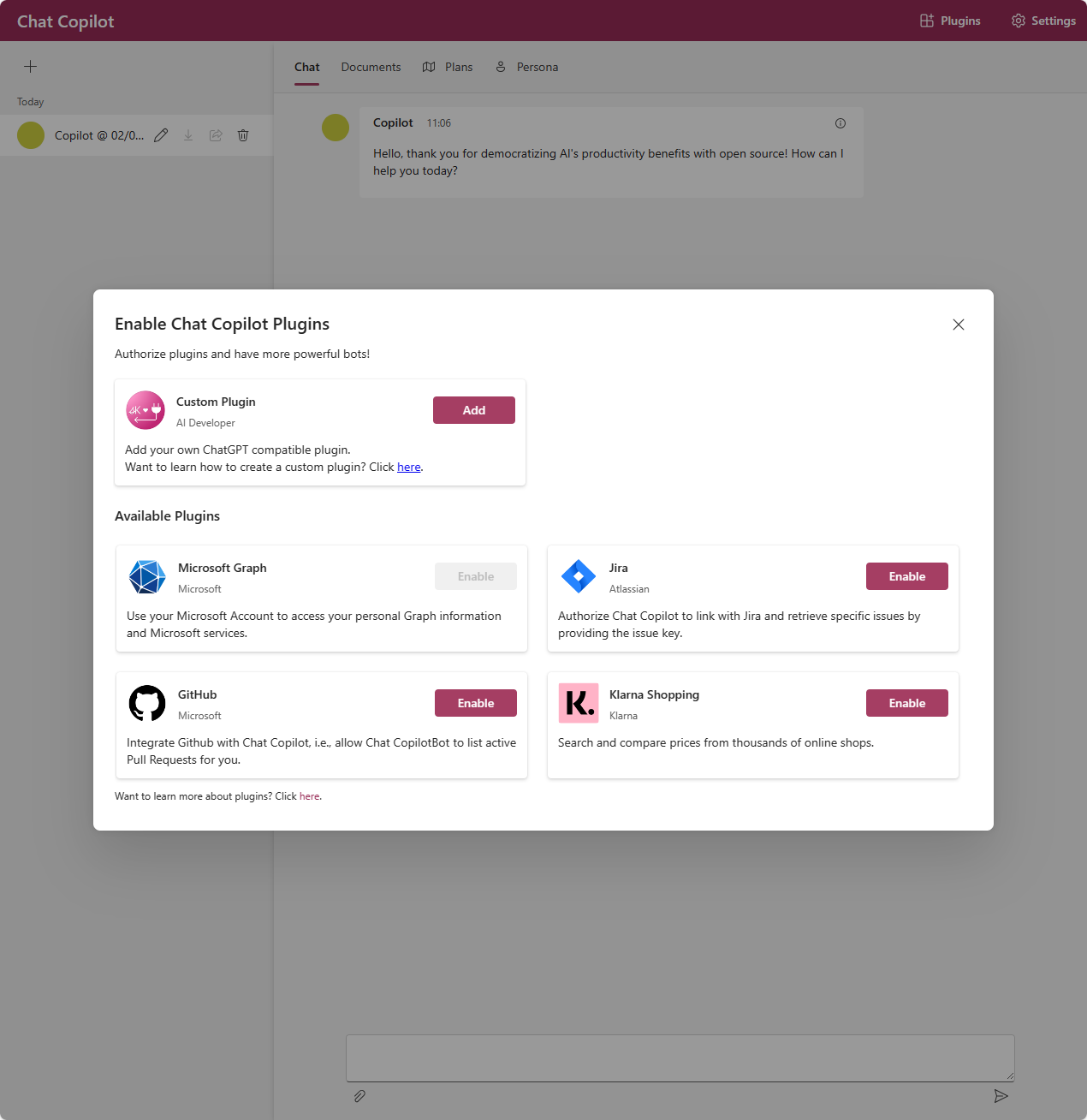

Chat Copilot Application

SK has released a Chat Copilot reference application. Unlike many ChatGPT repos out there, this sample application shows:

- a microservice deployment architecture (i.e. the frontend and backend services are separate),

- how to configure Azure AD for the frontend SPA and the backend API web service,

- how to use either OpenAI or Azure OpenAI,

- uploading of documents for embedding and vector DB storage (Semantic Memory implementation), and

- out-of-the-box, sample and custom plugins integration.

Other Sample Apps

There are other sample apps in addition to the Chat Copilot reference application. These apps show:

- a backend orchestrator service implemented using Azure Functions, and

- a set of TypeScript/React sample apps that use the backend orchestrator.

Join the Community

To sum up, Semantic Kernel is a powerful SDK for building an AI orchestrator for your Copilot stack.

This post is written for an audience of one, myself! But I do hope that it has somehow helped you as well. As the world is rapidly innovating in this space, one of the best ways to learn and contribute is by joining the community. Here are the links:

- GitHub Repo: For logging issues, discussions and code contributions,

- Discord: For realtime chat.

In this post, I shared my personal learning notes on how to use SK for various tasks, such as creating and executing plans, writing semantic and native functions, and more. I also showed you some practical resources for developing with SK, such as the VS Code extension, the Chat Copilot app, and other sample apps.

While the current SK version is already quite capable, it keeps evolving. If you want to learn more about SK and join its community, check out the links above.

Although I wrote this primarily for myself, I hope this post has helped you understand Semantic Kernel better and inspired you to give it a try. If you have any questions or feedback, feel free to leave a comment below or contact me directly. Thanks for reading!